Honey, AI Shrunk the Archive

IEEE/UMaine AI teleconference


Introduction

00:00 Ali Abedi introduces the webinar

01:09 The promise and threat of AI-driven archives

Audience survey on appropriate uses of AI

Ethical concerns about AI in general

AI's impact on the archive

05:33 From shelves to databases to AI models

07:00 "AI is an archive in reverse"—Eryk Salvaggio

Animistic AI metaphors

08:05 Why animate metaphors are misleading

The problem with both pro-AI (superbeing) and anti-AI (stochastic parrot) metaphors

Heterogeneous versus homogeneous complexity

Training a model versus training a baby

Mechanistic AI analogs

14:28 AI as compression algorithm

17:49 gzip beats BERT as a text classifier!

Large language models have thermodynamic properties that help us tune them

20:53 Adjusting temperature to tune AI output

Audience questions

25:54 Question: can original data be retrieved accurately from AI compression?

28:46 Question: how appropriate is the term "hallucination" for false outputs?

32:15 Question: why don't models admit when they don't know?

33:20 Question: is confabulation a better term than hallucination?

33:45 Ali Abedi: generative AI is the latest stage in compression

From analog sensory data to digital sampling to sophisticated compression

37:37 Question: is there a fair trade AI comparable to fair trade coffee?

Consequences of mechanistic analogy

38:04 Adjusting energy to tune AI output

39:55 Surprises of probability: the importance of density and temperature

The chance of your next lungful containing a molecule of Julius Caesar's dying breath is 98%!

45:09 Mistuned AI: insufficient density (obituary spam)

46:20 Mistuned AI: insufficient temperature (AI missing tumors)

47:00 Mistuned AI: too much temperature (bogus court cases)

47:20 Audience survey on density and variability for four tasks

Using ChatGPT to generate a chart to visualize audience results

53:10 Perceived best density and temperature for four tasks

- Commenting on an essay

- Grading an essay

- Summarizing a meeting

- Representing you at a meeting

55:55 Using thermodynamics to predict which tasks will succeed or fail

What the available density and temperature tell us

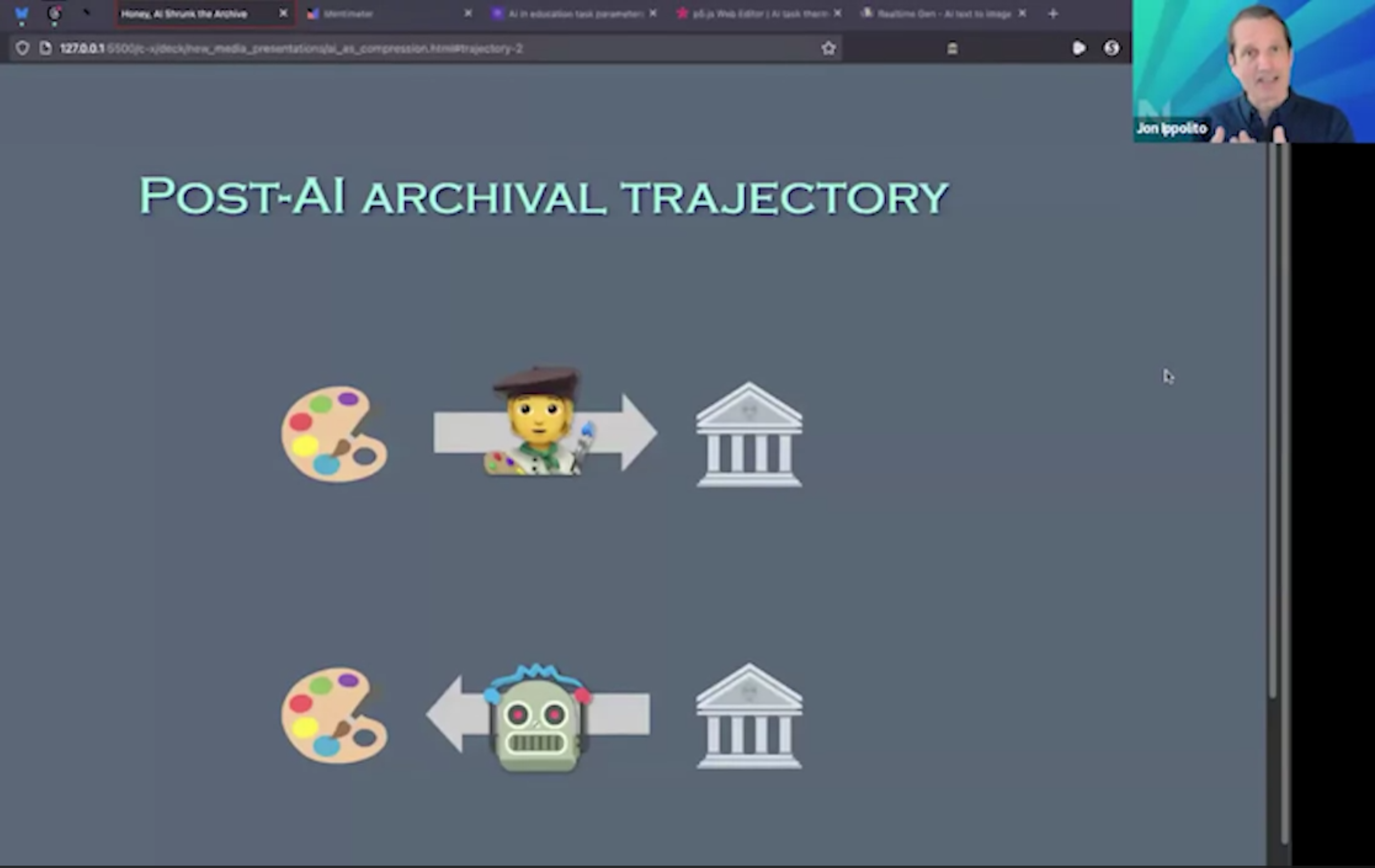

Warping the archival trajectory

56:44 AI improving access to collections

Using AI to find items in the Rijksmuseum collection

59:01 AI replacing collections

Leonardo.ai's realtime image generation

Will users post a real photo of the Colosseum when they can more easily generate one?

62:12 Replacing the entire web with Websim.ai

"Capy-bara-dise Getaways"

65:31 Replacing actual artists' websites

66:11 From the archive in reverse to the archival loop

New derivatives become the next training data, gradually losing fidelity due to compression

68:10 Conclusion: why mechanistic analogs lead to better insights than animate metaphors

The compression analogy helps explain what is good and bad about large language models

The thermodynamic analogy helps us tune models to specific uses

This teleconference is a project of the University of Maine's Digital Curation program. For more information, contact jippolito@maine.edu.

Timecodes are in minutes:seconds.

Whether wielded by AI critics or champions, animistic metaphors like stochastic parrots and godlike oracles obscure important dynamics of large language models. Drawing on insights from thermodynamics and software compression, this talk by New Media professor Jon Ippolito explores what mechanistic comparisons reveal about generative AI that analogies to living beings conceal.
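For readers who want a concrete handle on the thermodynamic comparison, the sketch below shows how a temperature setting is commonly applied when a language model picks its next word: raw scores are divided by the temperature before being converted to probabilities. It is an illustration only, not code from the talk; the function name and the three example scores are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical scores for three candidate next words (illustrative values only).
logits = [2.0, 1.0, 0.1]

cold = softmax_with_temperature(logits, 0.2)  # low temperature: top choice dominates
hot = softmax_with_temperature(logits, 5.0)   # high temperature: choices even out

print("low temperature: ", [round(p, 3) for p in cold])
print("high temperature:", [round(p, 3) for p in hot])
```

At a low temperature nearly all the probability lands on the highest-scoring word, which suits tasks that need consistency. At a high temperature the alternatives become almost equally likely, which adds variety but also raises the risk of implausible output.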

Just as a JPEG discards fine details to shrink an image, large language models smooth over anomalies and erase outliers. Understanding how compression works can help us apply AI effectively while avoiding a future where knowledge is not stored but continually rewritten, and where the archive itself risks being compressed out of existence.
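The outline's claim that gzip can rival BERT as a text classifier rests on a simple idea: two texts that share patterns compress better together than apart. The sketch below illustrates that idea with normalized compression distance and a single nearest neighbor. It is a minimal illustration under stated assumptions, not the code discussed in the talk; the helper names and the shape of the labeled examples are hypothetical.

```python
import gzip

def compressed_size(text):
    """Number of bytes gzip needs to store the text."""
    return len(gzip.compress(text.encode("utf-8")))

def ncd(a, b):
    """Normalized compression distance: lower means the texts share more patterns."""
    size_a = compressed_size(a)
    size_b = compressed_size(b)
    size_both = compressed_size(a + " " + b)
    return (size_both - min(size_a, size_b)) / max(size_a, size_b)

def classify(sample, labeled_examples):
    """Return the label of the example closest to the sample (1-nearest neighbor).

    labeled_examples is a list of (text, label) pairs; this structure is an
    assumption made for the sketch.
    """
    nearest_text, nearest_label = min(
        labeled_examples, key=lambda pair: ncd(sample, pair[0])
    )
    return nearest_label
```

No training takes place here: the compressor's ability to spot repeated patterns does all the work, which is why the approach is often cited as evidence that compression and language modeling are closely related.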

This talk from 3 April 2025 was moderated by Ali Abedi of UMaine AI and hosted by IEEE in conjunction with UMaine's New Media and Digital Curation programs, which regularly organize teleconferences about topics on the cutting edge of digital culture. For a text version of this talk, see "Honey, AI Shrunk the Archive: AI as Compression Algorithm," forthcoming from Bloomsbury Press.

Watch the entire video or choose an excerpt from the menu on this page.

Or view more teleconferences from the Digital Curation program.