00:00 Big changes are coming (Boothroyd)

2:34 DALL-E 1 (2021) from OpenAI

🔗 DALL-E 2 is the most painterly of current generators.

Potentially useful for commercial illustration or puppet animation in tools like After Effects.

9:14 Midjourney's distinct qualities

🔗 Midjourney looks more like a hyperrealistic photograph, whereas DALL-E looks more handmade.

Most popular, perhaps because it has the fewest guardrails.

11:41 How to use Midjourney in Discord

15:11 Are all Midjourney prompts public? (Heracles Papatheodorou)

16:08 Stable Diffusion's distinct qualities

🔗 Stable Diffusion reflects more "humanistic" aesthetics, useful for a stat card or game mechanic.

Open source, so it can run locally or via services like DreamStudio with a free trial or paid credits.

DreamStudio lets you choose styles like anime, digital art, photographic, or comic book.

20:46 Deep fakes and guardrails

Stable Diffusion doesn't have safeguards against taboo subjects like sex, alcohol, or celebrity images.

22:27 Prompt engineering as a field

Can be discouraging to see an AI accomplish 10 hours of work in 10 seconds.

Roles may be shifting from skill-based renderer to art producer.

24:47 Are there markers to identify fakes? (Rebecca Ruggiero)

25:27 Legal challenges based on source material

You can see the Shutterstock logo in some of the imagery.

26:33 Training on public domain images? (Mike Hanley)

🔗 Adobe Firefly is supposed to be trained only on images it owns.

27:56 Can you use AI-generated images commercially? (Celina Lage)

29:39 US Copyright Office's rejection of copyright for AI-generated images

30:16 Are these skills being taught in high school? (Ruggiero)

31:20 Text-to-video is still primitive

AI-generated Will Smith and Conan O'Brien videos.

34:03 Video-to-animation is promising

🔗 Corridor Crew's anime filter shows the potential of automated rotoscoping.

37:22 Rapid improvement in generators (Hanley)

🔗 Runway is a service to create very short videos from text.

41:43 AI voice generation will endanger jobs for advertisement voice actors

42:09 AI will create future roles for talented people who know design, filmmaking, production

44:14 Personalized entertainment generated by viewers (Hanley)

"We're going to have more content than we know what to do with." (Boothroyd)

46:18 Will streaming services like Spotify and Netflix survive? (Vijayanta Jain)

47:05 Synthesized characters, like an entire series with young Indiana Jones

47:30 Access and cost (Ruggiero)

48:53 Labor impact (Liam Dworkin)

"Eight people working in production now will become three people working with AI" (Boothroyd)

49:52 Desktop publishing as precedent (Peter Schilling)

51:46 Does AI help with writing scripts, e.g., for After Effects? (Scot Gresham-Lancaster)

53:33 Can creators benefit from applying AI to the logistical side of their business? (Ippolito)

This presentation was recorded by the University of Maine's New Media program. For more information, contact jippolito@maine.edu.

Timecodes are in minutes:seconds.

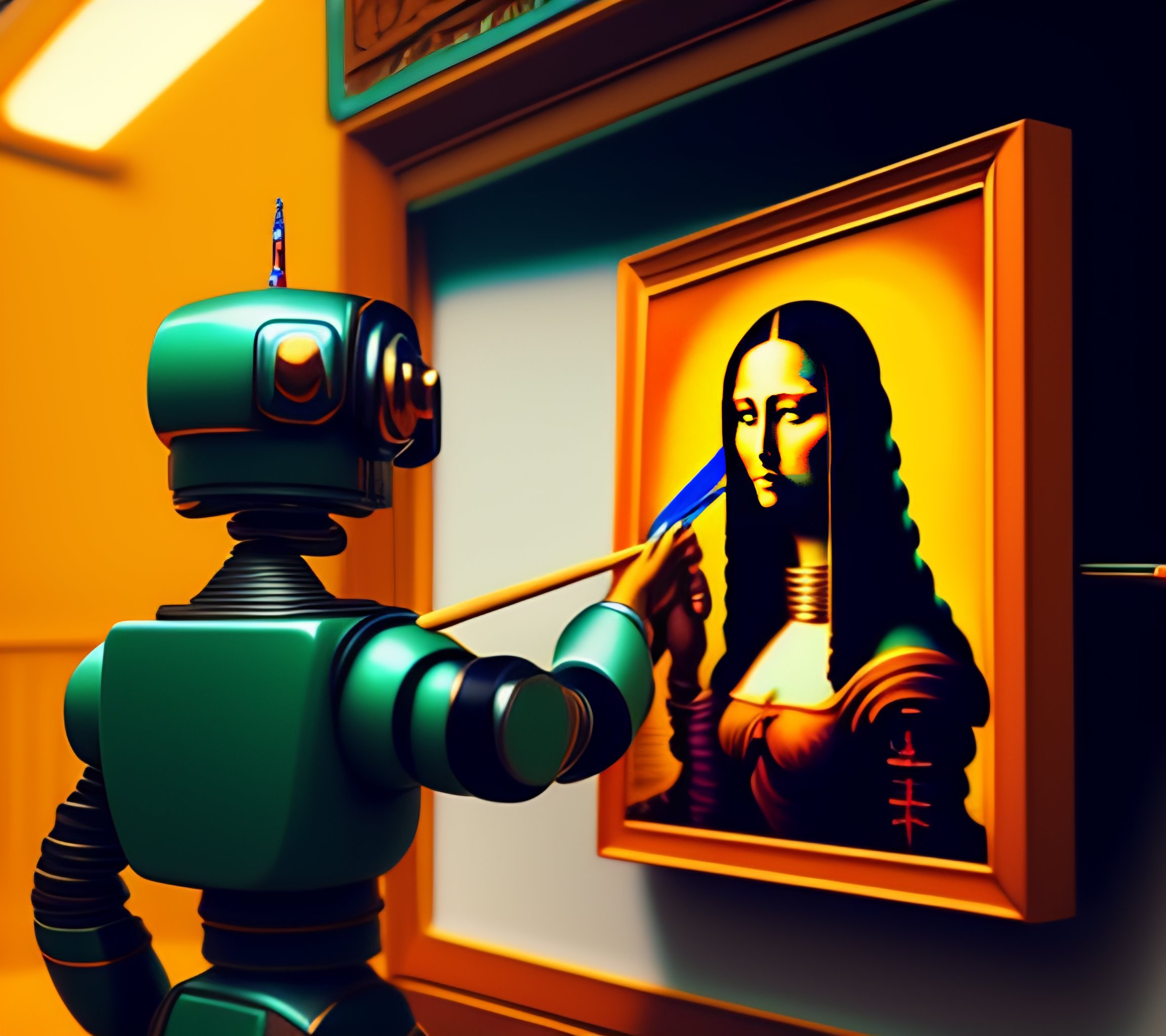

Generative AI was expanding the creative possibilities of art and design well before text generators like ChatGPT caught the world's attention. Given nothing more than a few well-chosen phrases, software like DALL-E 2, Stable Diffusion, and Midjourney can quickly generate never-before-seen images that are outlandish or realistic, in styles ranging from photographic to cartoonish. This workshop demos a handful of these tools and opens a discussion about how they might change art education as well as work prospects for creative professionals.

The webinar is led by Aaron Boothroyd, a Lecturer of New Media in the School of Computing and Information Science who is co-founder of UMaine's larger Learning With AI initiative. Boothroyd teaches courses on 3-D modeling, animation, digital art, special effects, and game design, and has produced work for clients such as Google, Frito-Lay, and The Jackson Laboratory.

This is part of a series of free webinars on cutting-edge technologies offered by the University of Maine's New Media program, which teaches animation, digital storytelling, gaming, music, physical computing, video, and web and app development. These are not PowerPoint lectures but guided demonstrations that students can follow at school or at home on their laptops. Learn more about these webinars or UMaine's Learning With AI initiative.

Watch the entire video or choose an excerpt from the menu on this page. (Image generated by Aaron Boothroyd with Stable Diffusion.)